Intro

It’s me again. It looks like it’s been over 3 years since I’ve made a blog post (wow, time flies!). First of all, I’m sorry about that. I don’t know if it’ll make up for it, but this post is one that I’ve wanted to write for quite a while.

Today I want to talk about automating whatever compliance checks you might be required to adhere to for any computers you manage. Depending on what you do and what requirements you have, the title may seem like it’s insanely obvious. If, like me, you’re held to the standards of something like DISA’s STIGs, then this might be a pretty useful post for you (and you might not believe me).

Some Background

If you don’t know what a Security Technical Implementation Guide (STIG) is, don’t worry too much about it. They’re just guides that detail settings and requirements that computers have to adhere to, and there are different ones for different OSes and applications (they actually apply to more than computers, but we’ll stick to computers for today’s discussion). Fully automating them is a hard problem for at least the following reasons:

- You have to know which ones apply to which systems

- Some of the checks require documentation, e.g., "Only approved individuals can have administrator access," or "All installed features must be documented with the site."

- There’s a whole bunch of them, and if/when you think you’ve evaluated all of your systems, most of the STIGs have been updated

- There’s more, but you probably get the picture

STIGs aren’t anything new to me as a sysadmin, and I’ve always strived to figure out ways to automate applying them and evaluating them. I’ve got some stories that I’d love to tell you about implementation wins (even before I knew what PowerShell was), but implementation is actually pretty simple for the vast majority of the Windows STIG checks now, and the stories would be boring (some of that’s because the OS made improvements, and some of it is because the STIGs got a little bit better). The harder thing now is finding systems that aren’t implemented properly for whatever reason, e.g., Group Policy is messed up (either server-side, or something’s wrong with the client), something’s wrong with your configuration management client, etc. With thousands of checks per system, how do you know if one system has slipped through the cracks?

Anyway, a few years ago, I had to provide proof of compliance on a bunch of systems. Like 100% compliance for 100% of the checks for a decent number of systems. In the STIG world, you usually do that with CKL files (these are XML checklists that are used with DISA’s StigViewer utility). I also became aware of the fact that this was going to be the new normal for all of the systems I managed.

If you’re familiar with STIGs, then you’re also familiar with SCAP (Security Content Automation Protocol). The problem is that SCAP isn’t capable of 100% STIG evaluation (at least not to my knowledge). It is pretty cool, and part of a complete compliance solution, though. The way it works is you get SCAP content, which are like definitions for different STIGs (STIGs use something called XCCDF to define those), and you feed those to a SCAP compliance validation program. The validation program knows how to read the SCAP content, and how to enumerate and evaluate against different clients. There are tons of SCAP validation programs out there, including stand-alone programs and components of larger programs that can tie in with larger configuration and/or vulnerability management solutions. The output generated by the validation program can be used to give you nice reports if you’re using something like Nessus or SCCM, or you can take the raw files and convert them into the CKL format.

SCAP wasn’t going to be enough, so over the course of a few months, after a mix of some good and bad decisions, a design for automating everything sort of took shape, and a PowerShell module called StigTester was born (pretty original name, huh?).

StigTester

The path to StigTester had a decent number of turns and false starts, but the way it exists in its current form really took shape about three years ago.

I know there are other automation solutions out there, but I’m not sure any of them go as far as StigTester (if there is such a solution that’s out there, please let me know so I can correct this section). The unique thing about it (I think) is the way it’s split into different components:

- The engine

- The STIG repository

- The test repository

- Applicability tests

- Test environments

- Check tests

- The documentation repository

- Other utilities and tools

It being split up that way makes it much tougher to explain exactly how it works, but I promise, it makes it capable of succeeding where other solutions have failed (here I’m talking about my other failed automation endeavors, not others out there that may very well work better than what I’m going to describe). The structure is actually the magic here, and if you understand that part, you can go make a version that’s definitely way better than StigTester is today in whatever language you like.

Instead of boring you with design details from the start, let me just walk you through what setting up, running, and maintaining StigTester looks like for a single system, and take a shallow peek under the surface for each of those views. I usually dive too deep when explaining stuff like this, and it doesn’t help anybody 🙂

Setup

Let’s imagine you’re starting your own StigTester distribution. To do that, you’d start with the module files, but with no entries in any of the three repositories (stick with me here). Running an evaluation on a system wouldn’t do anything, because the repos are empty.

Let’s say you want to implement the Windows 10 STIG (I don’t know the current version/release, but let’s say V1R10 is the current one for our example). You’d go to DISA’s site and get the latest XCCDF STIG file and copy it into the STIG repository.
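To give a feel for what the engine has to work with, here is a minimal sketch of pulling rules out of an XCCDF file with PowerShell's built-in XML support. The inline benchmark is a made-up, heavily trimmed stand-in (the IDs, titles, and check text are placeholders, not real Windows 10 STIG content), and the element names reflect my understanding of the XCCDF layout DISA publishes, so verify them against a real file before relying on this.

```powershell
# Hypothetical, trimmed-down XCCDF content; a real STIG file carries far more metadata.
$xccdfText = @'
<Benchmark xmlns="http://checklists.nist.gov/xccdf/1.1" id="Sample_STIG">
  <title>Sample STIG</title>
  <Group id="V-0001">
    <Rule id="SV-0001r1_rule" severity="medium">
      <title>Sample registry setting must be enabled.</title>
      <check><check-content>Verify the Enabled value is 1.</check-content></check>
    </Rule>
  </Group>
  <Group id="V-0002">
    <Rule id="SV-0002r1_rule" severity="high">
      <title>Sample permissions must be restricted.</title>
      <check><check-content>Verify the ACL on the system drive.</check-content></check>
    </Rule>
  </Group>
</Benchmark>
'@

$xccdf = [xml]$xccdfText

# Each Group wraps one Rule; flatten them into simple objects.
$rules = foreach ($group in $xccdf.Benchmark.Group) {
    [pscustomobject]@{
        VulnId       = $group.id
        RuleId       = $group.Rule.id
        Severity     = $group.Rule.severity
        Title        = $group.Rule.title
        CheckContent = $group.Rule.check.'check-content'
    }
}

$rules | Format-Table VulnId, Severity, Title
```

With a real file you would swap the here-string for something like `Get-Content -Raw` on the XCCDF path. The `CheckContent` property is the piece that matters most later on, since the tests are tracked by it.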

Having the STIG in the STIG Repository is a start, but you have to have something in the Test Repository for any CKLs to be generated during an evaluation phase. The structure of the repo isn’t important, because you interface with it via a helper command called New-StigTesterDefinitionScript:

PS> New-StigTesterDefinitionScript "Windows 10"

# Tab completion kicked in for that command :)

That makes a template that’s ready to be run to inject all of your tests into the test repo (if you run it at this point, it won’t do anything). You open it up in your editor of choice, and you fill in at least the first entry, which is the applicability test. That’s where you put the code snippet that will return $true if the STIG is applicable, so it’ll look something like this:

New-StigTesterTestRepoItem -Id d587384ab01ff13d3bf25ba9c299a0c0cde5a6e3 -Type ApplicabilityTest {

$CS = Get-CimInstance Win32_ComputerSystem

$OS = Get-CimInstance Win32_OperatingSystem

$CS.DomainRole -in 0, 1 -and $OS.Version -match '^10\.'

}

If you fill that part of your template in and then run it, you’ll actually see some action when you try to generate CKLs during an evaluation phase. Any Windows 10 system you evaluate would generate CKL files that have all of the checks set to ‘Not Reviewed’. That might not seem like much, but if you can grab all of those files (there’s nothing stopping you from having StigTester write its results to a network share so you already have everything together), then you can actually use some other helper utilities in the module to parse the CKLs and create an applicability matrix (NOTE: Don’t use StigTester just to make an applicability matrix–keep going and write some tests).
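The module's own matrix utilities aren't shown in this post, but the idea can be sketched in a few lines. This is an illustration only: `New-SampleCkl` is a hypothetical helper that fakes a bare-bones CKL (real ones have many more elements), and the host names and STIG titles are invented.

```powershell
# Hypothetical helper that builds a skeletal CKL document for the demo.
function New-SampleCkl {
    param([string] $HostName, [string] $StigTitle)
@"
<CHECKLIST>
  <ASSET><HOST_NAME>$HostName</HOST_NAME></ASSET>
  <STIGS><iSTIG>
    <STIG_INFO>
      <SI_DATA><SID_NAME>title</SID_NAME><SID_DATA>$StigTitle</SID_DATA></SI_DATA>
    </STIG_INFO>
    <VULN><STATUS>Not_Reviewed</STATUS></VULN>
  </iSTIG></STIGS>
</CHECKLIST>
"@
}

# In practice these strings would come from the CKL files on your share.
$ckls = @(
    (New-SampleCkl -HostName 'PC01' -StigTitle 'Windows 10 STIG'),
    (New-SampleCkl -HostName 'PC01' -StigTitle 'Firefox STIG'),
    (New-SampleCkl -HostName 'PC02' -StigTitle 'Windows 10 STIG')
)

# One (host, STIG) pair per CKL: a CKL only exists if the STIG was applicable.
$applicable = foreach ($text in $ckls) {
    $ckl = [xml]$text
    $title = ($ckl.CHECKLIST.STIGS.iSTIG.STIG_INFO.SI_DATA |
        Where-Object { $_.SID_NAME -eq 'title' }).SID_DATA
    [pscustomobject]@{ HostName = $ckl.CHECKLIST.ASSET.HOST_NAME; Stig = $title }
}

# Pivot the pairs into one row per host with a True/False column per STIG.
$stigs = $applicable.Stig | Sort-Object -Unique
$matrix = foreach ($hostGroup in ($applicable | Group-Object HostName)) {
    $row = [ordered]@{ HostName = $hostGroup.Name }
    foreach ($stig in $stigs) {
        $row[$stig] = $hostGroup.Group.Stig -contains $stig
    }
    [pscustomobject]$row
}

$matrix | Format-Table
```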

We’re not going to cover it in this example, but you might want to create a test environment to hold any functions that other tests may use. A couple of scenarios that jump to my head:

- Firefox (at least in the past) wanted to know about plugins and file open actions, and some of that requires you to look into the profiles on the system. Your Firefox STIG shared environment might go ahead and enumerate all of the profiles so that your tests can run faster without having to do that expensive operation multiple times. You’d probably want to go ahead and write some helper functions that know how to read the profiles and get whatever info you need out of them that the tests can use.

- The .NET Framework has a handful of checks that want you to look through the whole computer for certain types of files. You could make a shared environment for the STIG that looks through the computer for these special files once, stores the locations, and then have the tests look there.

There is a common shared environment that all of the STIGs have access to that has some of the most common types of checks you might encounter.

Back to our example: now you get the unpleasant task of going through your template and filling out tests. This sounds worse than it is for the vast majority of checks, though (and rest assured that even for really nasty checks, you only have to write the test once, and then you reap infinite rewards). That might look something like this:

New-StigTesterTestRepoItem -Id abcd384ab01ff13d3bf25ba9c299a0c0cde5a6e3 -Type Check {

# Assume this is a registry check

$Params = @{

Path = 'HKLM:\SOFTWARE\Policies\Microsoft\Windows NT\SomeFeature'

Name = 'Enabled'

ValueType = 'DWORD'

ExpectedValue = 1

}

Assert-RegistryValue @Params

}

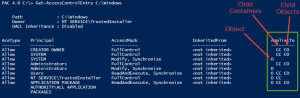

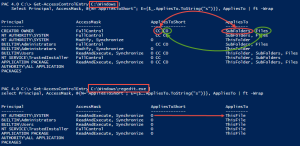

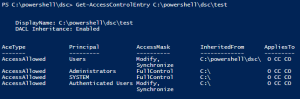

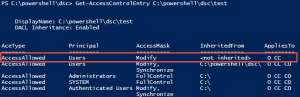

New-StigTesterTestRepoItem -Id ef12384ab01ff13d3bf25ba9c299a0c0cde5a6e3 -Type Check {

# Assume this is a permission check

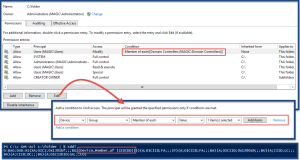

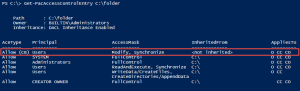

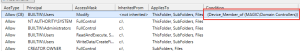

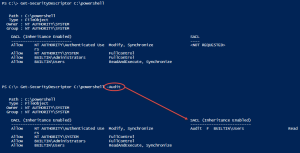

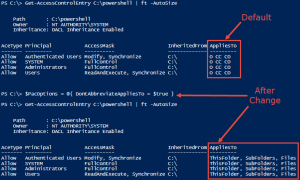

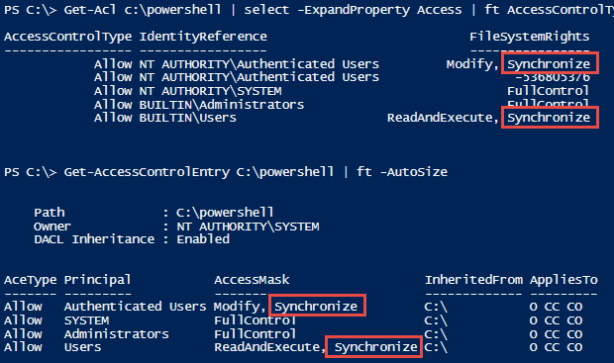

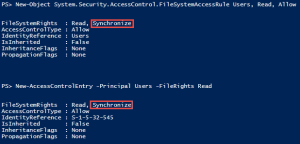

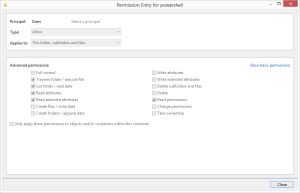

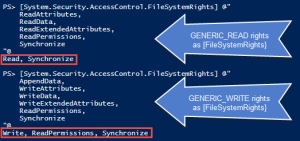

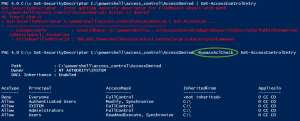

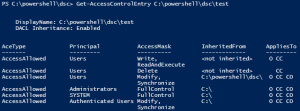

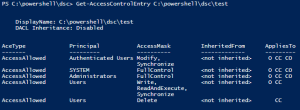

Assert-Acl "$($env:SystemDrive)\" -AllowedAccess "

'CREATOR OWNER' FullControl CC, CO

Administrators, SYSTEM FullControl O, CC, CO

Users ReadAndExecute O, CC, CO

Users CreateDirectories O, CC

Users CreateFiles CC

"

# NOTE: Assert-Acl is a powerhouse command. Internally, it's using Test-Acl, which you can see here: https://github.com/rohnedwards/TestAcl

# You should see that thing rip through AD object permissions for other STIGs :)

}

New-StigTesterTestRepoItem -Id 3456384ab01ff13d3bf25ba9c299a0c0cde5a6e3 -Type Check {

# Assume this is a process mitigation check (if you've ever tried to

# do one of these manually, you'll know how awesome this is)

# Take note of how you can put logic in to switch the check to N/A. You

# can set any status like this

$MinimumReleaseId = 1709

if ($Win10ReleaseId -lt $MinimumReleaseId) { # This was defined in the shared environment

Set-VulnTestStatus -Status Not_Applicable -Comments "Only applicable to ${MinimumReleaseId} and greater; current release ID is ${Win10ReleaseId}"

return

}

Assert-ProcessMitigation -ProcessName wmplayer.exe -MitigationsToCheck @{

Dep = @{ Enable = 'ON' }

Payload = @{

EnableRopStackPivot = 'ON'

EnableRopCallerCheck = 'ON'

EnableRopSimExec = 'ON'

}

}

}

Notice the use of lots of helper commands. We’re not going to cover test writing best practices, but just know that doing it that way makes it way easier to change the detailed information written out to the CKL files. And believe me, it’s there. Want to know why that check passed or failed? It will be in your CKL comments, letting you know everywhere that was checked and why the assertion passed or failed.

Anyway, you, test creator/maintainer, went through and answered all of the template sections you could, and then ran the template file to populate the test repository. Hopefully you’ve got your repos in some sort of source control, so it’s time to go ahead and commit your changes (the repos are all text at this point). Don’t worry if you didn’t write tests for everything–those checks will just show up as ‘Not Reviewed’ in your results, and even manual STIG evaluators will leave things for later. 🙂

We’re going to ignore the Documentation Repository for now, as this blog post is already going to be way longer than I wanted.

Evaluation

Let’s recap what was done in our example: the Windows 10 STIG XCCDF file was dropped into the STIG Repository, and then a template script was created so that an applicability test and checks could be added for that STIG into the Test Repository.

On your Windows 10 system that you want to evaluate, run this:

PS> Import-Module StigTester

PS> Invoke-StigTest

At this point, the engine will wake up and start enumerating through the STIG Repository. It will keep track of the latest version of each STIG that’s contained in it, and it will make a call to the test repository to get the applicability test. If you run this on a Windows 10 system, then the test will return $true, and then the engine will get each of the checks from the STIG and see if the Test Repository has a test made.
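The "latest version" step is easy to get wrong with plain string sorting, since 'V1R9' sorts after 'V1R10' as text. Here is a small sketch of one way to handle it. It is not StigTester's engine code: the repository listing, the `ConvertTo-StigVersion` helper, and the stand-in applicability tests are all hypothetical.

```powershell
# Hypothetical repository listing; the V#R# naming is assumed for this sketch.
$repoItems = @(
    [pscustomobject]@{ Title = 'Windows 10'; Version = 'V1R9'  }
    [pscustomobject]@{ Title = 'Windows 10'; Version = 'V1R10' }
    [pscustomobject]@{ Title = 'Windows 10'; Version = 'V1R12' }
    [pscustomobject]@{ Title = 'Firefox';    Version = 'V4R20' }
)

# Turn 'V1R10' into a System.Version so comparisons are numeric (R10 > R9).
function ConvertTo-StigVersion {
    param([string] $Text)
    if ($Text -notmatch '^V(\d+)R(\d+)$') { throw "Unrecognized version string: $Text" }
    [version]('{0}.{1}' -f $Matches[1], $Matches[2])
}

# Keep only the newest entry for each STIG title.
$latest = $repoItems | Group-Object Title | ForEach-Object {
    $_.Group |
        Sort-Object { ConvertTo-StigVersion $_.Version } -Descending |
        Select-Object -First 1
}

# Stand-in applicability tests: script blocks that return $true or $false.
$applicabilityTests = @{
    'Windows 10' = { $true }
    'Firefox'    = { $false }
}

foreach ($stig in $latest) {
    $test = $applicabilityTests[$stig.Title]
    if (& $test) {
        "Evaluating $($stig.Title) $($stig.Version)"
    }
}
```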

I didn’t mention this above when you were writing the tests, but the Test Repository keeps track of these tests based on an id generated by the actual ‘Check Content’ of the STIG check (it’s slightly more complicated than that, but just imagine it’s hashing the ‘Check Content’ for now). This will be important in the next section dealing with maintenance.
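As a rough illustration of content-based ids (and explicitly not the real algorithm, which is more involved), the sketch below hashes normalized 'Check Content' text. SHA-1 is only a guess prompted by the 40-character hex ids in the earlier examples, and the whitespace normalization and the `Get-CheckContentId` name are assumptions made for the demo.

```powershell
# Hypothetical id function: hash the check text after collapsing whitespace.
function Get-CheckContentId {
    param([Parameter(Mandatory)][string] $CheckContent)

    # Collapse runs of whitespace so formatting-only edits keep the same id.
    $normalized = ($CheckContent -replace '\s+', ' ').Trim()
    $bytes = [System.Text.Encoding]::UTF8.GetBytes($normalized)

    $sha1 = [System.Security.Cryptography.SHA1]::Create()
    try {
        $hash = $sha1.ComputeHash($bytes)
    }
    finally {
        $sha1.Dispose()
    }

    # Render the hash bytes as a lowercase hex string.
    -join ($hash | ForEach-Object { $_.ToString('x2') })
}

$original   = Get-CheckContentId "Verify the Enabled value is 1."
$reflowed   = Get-CheckContentId "Verify the   Enabled value`r`n is 1."
$reworded   = Get-CheckContentId "Verify the Enabled value is 2."

"Same id after reflow:  $($original -eq $reflowed)"
"Same id after rewording: $($original -eq $reworded)"
```

The useful property is that the id follows the text of the check rather than its V-number, so a test stays attached to a check for as long as the wording it was written against survives.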

This is also the part where the Documentation Repository is consulted. We didn’t add any documentation, but know that the engine knows a whole lot about the system that’s being evaluated, and the Documentation Repository can contain small bits of information about checks that are scoped to systems based on an infinite number of properties, e.g., the domain, the computername, IP address, OS, etc. Again, documentation will have to wait (it’s insanely awesome, though).

In our example, there’s just the one STIG, but the engine would go through each one and create a CKL file with LOTS and LOTS of details. More than someone manually creating a CKL would ever put down.

Maintenance

That’s great, but what about when the STIGs are updated? No problem at all. Go get the newest XCCDF file, and drop it in the STIG Repository (leave the old one for now).

At this point, if you were to run a new evaluation, you’d get 100% accurate CKL files. Let’s assume you had V1R10 100% implemented, and then you drop V1R12 there (you missed an update–whoops!). When the evaluation occurs, any checks that didn’t change (remember that they’re tracked by their ‘Check Content’) are still in the repo. Any checks that were removed won’t be looked up, so they won’t be in the CKLs, any that stayed the same will be evaluated, and any new or modified ones will show as ‘Not Reviewed’. You might not have 100% of the checks implemented anymore, but you’re also not stuck trying to generate some new definition file from scratch, either.
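The bookkeeping described above boils down to a lookup by id. The sketch below uses shortened, made-up ids and a plain hashtable as a stand-in for the Test Repository, so treat it as a picture of the idea rather than how the engine stores things.

```powershell
# Stand-in Test Repository: id -> test script block (ids are placeholders).
$testRepo = @{
    'aaa111' = { 'registry test' }
    'bbb222' = { 'acl test' }
    'ccc333' = { 'mitigation test' }
}

# Check ids found in the newer STIG release. Compared to the old release,
# 'bbb222' is gone (removed or reworded) and 'ddd444' is new or modified.
$newStigCheckIds = 'aaa111', 'ccc333', 'ddd444'

# Only ids present in the new release are looked up.
$results = foreach ($id in $newStigCheckIds) {
    $status = if ($testRepo.ContainsKey($id)) { 'Evaluated' } else { 'Not_Reviewed' }
    [pscustomobject]@{ Id = $id; Status = $status }
}

# Tests that no check in the new release points at anymore.
$orphaned = $testRepo.Keys | Where-Object { $_ -notin $newStigCheckIds }

$results | Format-Table
"Tests no longer referenced: $($orphaned -join ', ')"
```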

To update, you do something that should look familiar:

PS> New-StigTesterDefinitionScript "Windows 10" -DontIncludeImplementedChecks

That switch makes it so you only get the checks that aren’t in the Test Repository. And since you left the old XCCDF file in the STIG Repository, your template will also show you extra info for the modified checks that existed in the previous version, along with the old test code (90% of updating STIGs is comparing the ‘Check Content’, seeing some note was added that doesn’t change the nature of the test, and copying/pasting the old test into the template).

Then you commit your changes to your source control and distribute the updated StigTester.

Back to the Design

I don’t know if any of that made sense. If it did, then hopefully you see the benefits of designing it this way:

- Doing anything that resembles coding can be kept separate from evaluating, so testers don’t have to be comfortable with any scripting at all.

- The actual engine has the hard core "coding", and it can be kept completely separate from STIG testing and documentation maintenance. This means that a site could just pull engine updates and maintain their own repos. At my site, I actually switched jobs, and StigTester’s engine has been working without modification for about a year, but the repos are being steadily updated.

- You can pick and choose which repos you want to use. For instance, imagine getting a STIG Repository from an external site, but maintaining your own Test Repository. You don’t have a choice on maintaining your own Documentation Repository, but you might choose to take someone else’s Test Repository, too.

- StigTester doesn’t currently do this, but this design allows you to "stack" different repos, too. That’s a different discussion…

It also scales really well, but that’s not due to the design. Automate anything to where you just run a command, and then scalability is almost completely solved for you since it just becomes a matter of "How do I deploy it?", and you should already have a mechanism for that since you’re having to update your software.

Where Do I Get a Copy?

Well, you don’t get a copy of it in its current form. So much of it was made on the job, I don’t even want to get into who owns what, and what process it would take to get it released. It could probably be released, but since it was designed on the fly, it’s sort of a mess. The engine hasn’t had any major changes in over a year (it might not have had any changes at all for almost a year), and even when I was using it daily, I had a whole list of improvements that could be made.

For that reason, I’m planning to revamp it and build it from scratch using some lessons learned. The new version, which I’m thinking of calling ComplianceTester, will (at least in theory) be capable of doing more than just STIG checks, and it’s going to work for Linux, too.

I’ve mostly implemented the code for the STIG Repository already (it’s now called the Guideline Repository). I should be able to throw what I’ve got up to my GitHub page soon.

The Documentation Repository

This really deserves its own post. StigTester in its current form doesn’t do this justice, but the current implementation is still one of the most useful parts of the whole system. This is the magic piece that can get you to 100% compliance.

If this post made any sense and you’re still interested, let me know, and I’ll dedicate a blog post to the topic.

Conclusion

I guess that’s about it for now. If anyone comes across this, please let me know if you have any questions, suggestions, etc. Does it make sense? Is there any part I should elaborate on (including the Documentation Repository)? Do you have some sort of compliance guide you have to follow that’s not a STIG that I can look at to make sure I’m keeping the new framework extensible and nimble enough to work with it? Would you be interested in helping with ComplianceTester?

Oh, I guess I should provide some metrics on what StigTester was doing when I was using it daily:

- I could get you about 500,000 checks in less than a day (that’s evaluated, analyzed, and visualized–visualization is a topic for another day)

- I want to say that the average workstation had something like 1,000-1,200 checks with all of their applicable STIGs, but I could be wrong. We essentially had 100% test coverage for them. We still had a few STIG technologies to implement on servers (we got all the big ones, and the ones we didn’t do were just a matter of not getting to them–I’ve never seen a Windows STIG I didn’t think could be automated)

- We didn’t talk about this today, but the engine has some tools that’ll let you ingest SCAP results and run them through a fake evaluation phase in order to merge documentation. We did that for some of our non-Windows OSes (yet another example of the Documentation Repository popping up)

- We also rigged up a little solution for our network gear to take some non-PowerShell custom compliance evaluation results and run those through the engine’s fake evaluation phase, too. This helped us take custom output and turn it into CKLs, and it also let us use our documentation the same way we did on the non-Windows systems that still supported SCAP.